Micron’s Rebellion: 'DRAM Three Kingdoms' Shaken by SOCAMM Commercialization

Micron Seizes the SOCAMM Market in Partnership with NVIDIA

Samsung Strikes Back with CXL and GDDR7

The Era of Customized Memory by Application and Chipset Has Arrived

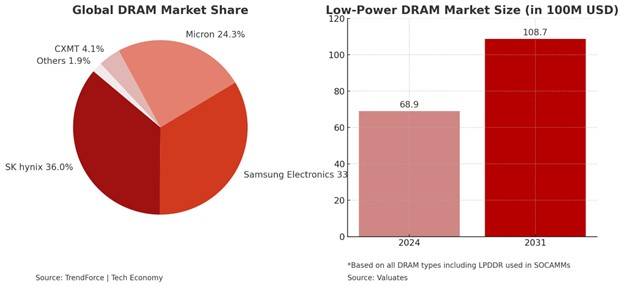

The global memory industry is undergoing a seismic shift. For years, the battle lines were clearly drawn: Samsung Electronics and SK hynix reigned supreme, their rivalry centered on High Bandwidth Memory (HBM). These two South Korean giants shaped the direction of DRAM innovation and locked in market dominance. But in a move that may permanently alter the power structure of the so-called "DRAM Three Kingdoms," U.S.-based Micron Technology has entered the fray with a formidable new weapon: the SOCAMM (Small Outline Compression Attached Memory Module).

Micron’s announcement of SOCAMM’s commercialization not only upends the HBM-centric status quo but also brings a markedly different memory architecture into mainstream use. The timing is critical: artificial intelligence, large-scale computing, and real-time data processing are pushing conventional memory systems to their limits. As industry demands diversify and technical bottlenecks multiply, the lines of competition are being redrawn. In response, Samsung is deploying its CXL (Compute Express Link) memory to rework how servers attach and share memory, while also fast-tracking GDDR7 for graphics- and inference-heavy applications. The battle for AI memory dominance has never been more complex, or more consequential.

SOCAMM and the Rise of a New Memory Architecture

Micron’s SOCAMM isn’t just an upgrade—it represents a conceptual shift in memory architecture. Scheduled to debut in NVIDIA’s next-generation AI accelerator GB300, SOCAMM will be deployed alongside HBM, creating a dual-memory structure tailored for the most demanding workloads. The GB300 platform combines NVIDIA’s Grace CPU and Blackwell Ultra GPU, designed for AI training, inference, and cloud datacenter workloads. When NVIDIA initiated the project, it tapped all three DRAM titans—Samsung, SK hynix, and Micron—to develop viable SOCAMM modules. Micron was the first to secure approval for mass production, a symbolic coup in the high-stakes memory war.

So, what makes SOCAMM revolutionary? Unlike conventional DRAM modules, which connect through longer and more layered memory interfaces, SOCAMM links directly to the CPU or GPU, shortening the path between processor and memory. This proximity reduces data transfer bottlenecks and enables very high throughput, which is essential for high-performance computing (HPC), real-time video analytics, large language model (LLM) training, and autonomous vehicle systems.

In practical terms, SOCAMM lets the memory sit next to the chip, so data reaches the processor with minimal latency. That addresses a longstanding issue in conventional DRAM, where physical distance and bus congestion limit performance. It also operates with greater energy efficiency, better heat dissipation, and lower power draw, making it an attractive option for data centers, edge computing environments, and AI server clusters that are increasingly sensitive to both performance and operational cost.

Another major advantage is its modular and flexible design. SOCAMM is compatible with existing motherboard infrastructure, unlike HBM, which requires specific packaging and thermal configurations. It combines cost-effectiveness with competitive performance, carving out a strategic "middle ground" in a market that often forces a choice between premium-grade performance and affordability. This makes SOCAMM ideal for a multi-tiered AI ecosystem, where not every workload demands HBM-class performance but still requires higher speed and reliability than standard DDR5.

Micron is leveraging its unique position, the ability to produce both SOCAMM and HBM3E concurrently, to cover a broader swath of the memory demand curve. While Samsung and SK hynix remain focused on advancing HBM density and efficiency, Micron is betting on portfolio diversification to gain market share early. Should SOCAMM move from pilot projects to large-scale deployment, the memory market will shift from single-technology dominance to a coexistence of heterogeneous architectures, each optimized for a different class of compute demand.

Samsung’s Strategic Pivot: Betting on CXL and GDDR7

In the face of Micron’s disruptive momentum, Samsung Electronics is doubling down on innovation—not by escalating the HBM arms race, but by pushing forward with CXL (Compute Express Link) memory technology. CXL is a next-generation high-speed interconnect that enables dynamic memory sharing between CPUs, GPUs, and other accelerators. It provides faster and more flexible data exchange, tackling one of the most persistent pain points in AI and HPC architecture: bandwidth bottlenecks.

Samsung envisions CXL as a central component of its future server ecosystem. Unlike traditional memory constrained by physical slots and rigid configurations, CXL memory allows modular and scalable connections across diverse compute resources. This flexibility is ideal for AI servers and cloud data centers, where workloads and performance needs change rapidly. CXL also supports memory pooling and tiering, which means systems can allocate memory dynamically depending on demand—boosting utilization rates and reducing idle resources.
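The utilization benefit of pooling can be illustrated with a toy calculation. The sketch below is not a model of any real CXL product: the per-server demands and capacities are hypothetical numbers chosen for illustration, the function names are invented here, and the model ignores the extra latency and partial-pooling limits that a real deployment would face. It only compares how much demand can be served when each server is limited to its own local memory versus when the same total capacity is shared.

```python
# Toy comparison of fixed per-server memory vs. one shared memory pool.
# All figures are hypothetical and exist only to illustrate the idea.

def served_fixed(demands_gb, per_server_gb):
    """Each server may use only its own local capacity."""
    return sum(min(d, per_server_gb) for d in demands_gb)


def served_pooled(demands_gb, per_server_gb):
    """The same total capacity is shared and handed out on demand."""
    total_capacity = per_server_gb * len(demands_gb)
    return min(sum(demands_gb), total_capacity)


demands = [100, 700, 250, 950]  # hypothetical demand per server, in GB
capacity = 512                  # hypothetical capacity per server, in GB

total = capacity * len(demands)
fixed = served_fixed(demands, capacity)
pooled = served_pooled(demands, capacity)

print(f"fixed utilization:  {fixed / total:.0%}")   # 67%
print(f"pooled utilization: {pooled / total:.0%}")  # 98%
```

In this made-up scenario, two servers sit mostly idle while two others run out of local memory; sharing the capacity lets the busy servers borrow what the idle ones are not using.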

Although the CXL market is still in its infancy, Samsung has made early and aggressive investments, positioning itself to lead as adoption scales. The company isn’t just developing memory modules—it’s attempting to reimagine the architecture of server infrastructure itself, separating memory from compute units and interlinking them in more efficient ways. While SK hynix and Micron continue refining performance in traditional memory formats, Samsung is exploring how to overcome the limitations of the motherboard itself.

At the same time, Samsung is preparing for the commercial rollout of GDDR7, a next-generation high-speed memory optimized for graphics and inference accelerators. GDDR7 is 1.4 times as fast as GDDR6 and more than 20% more power-efficient, making it indispensable for high-end gaming GPUs, AI graphics accelerators, and ultra-high refresh rate displays. Rather than promoting GDDR7 as a standalone solution, Samsung is integrating it into a modular strategy, pairing it with CXL and DDR5 to deliver custom-configured memory packages based on performance demands.
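As a rough worked example of what those two ratios imply together, the sketch below makes two assumptions that are not in the article: a hypothetical GDDR6 baseline data rate, and a reading of "power efficiency" as bits transferred per joule, with the gain taken as exactly 20%. Under that reading, each bit costs less energy, but total power at full speed can still rise because more bits move per second.

```python
# Worked arithmetic for the GDDR7-vs-GDDR6 ratios quoted in the article.
# The baseline rate is a hypothetical placeholder; only the ratios come from the text.

GDDR6_RATE_GBPS = 20.0   # assumed per-pin data rate, for illustration only
SPEEDUP = 1.4            # "1.4 times" faster, from the article
EFFICIENCY_GAIN = 0.20   # "over 20%", treated here as exactly 20%

# Data rate scales directly with the speedup.
gddr7_rate = GDDR6_RATE_GBPS * SPEEDUP

# Assumption: efficiency means bits per joule, so energy per bit is the inverse.
energy_per_bit_ratio = 1 / (1 + EFFICIENCY_GAIN)

# Power = (bits per second) x (energy per bit), relative to GDDR6 at full speed.
power_ratio = SPEEDUP * energy_per_bit_ratio

print(f"GDDR7 rate:            {gddr7_rate:.1f} Gbps")         # 28.0 Gbps
print(f"energy per bit:        {energy_per_bit_ratio:.2f}x")   # 0.83x
print(f"power at full speed:   {power_ratio:.2f}x")            # 1.17x
```

Under these assumptions, running at the full 1.4x rate would draw roughly 17% more power than the baseline, while doing the same amount of work as GDDR6 would take roughly 17% less energy.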

This strategic pivot—moving from single-tech competition to multi-tech coexistence—marks a profound shift in Samsung’s playbook. In a market fragmenting by application type, a singular memory solution is no longer viable. Samsung’s diversified portfolio aims to cover the entire performance spectrum, from ultra-high bandwidth to mainstream and specialized workloads.

A Fragmenting Market and the Road Ahead

The era of one-size-fits-all memory is over. As AI continues to evolve into various verticals—autonomous vehicles, generative AI models, edge analytics, immersive media—the memory needs of each workload diverge. What was once a unified race to build the fastest DRAM has turned into a multi-lane highway of innovation, with each manufacturer specializing in distinct directions. The DRAM "Three Kingdoms" are no longer battling for dominance over a single territory but are now mapping out new regions to claim.

This fragmentation presents both operational challenges and financial opportunities. Semiconductor companies must now maintain a wider product range, adapt to multiple interfaces, and support varying performance tiers—all while managing manufacturing cost and yield. However, the upside is considerable. Diversified demand creates new revenue streams and cushions companies from the volatility of any single product category.

Samsung’s recent commercial wins suggest that its diversified strategy is already paying off. The company was selected as the main memory supplier for NVIDIA’s flagship GeForce RTX 50 series and for the RTX PRO 6000D (B40), a budget-friendly AI accelerator tailored for the Chinese market. These contracts signal a strong rebound in profitability, particularly as AI demand spreads beyond hyperscale servers into consumer and enterprise-grade applications.

According to Kim Dong-won, a senior analyst at KB Securities, the increase in AI server deployments across industries is fueling demand for varied AI semiconductors, which in turn lifts the broader DRAM market. He notes that Samsung will increasingly benefit as the market shifts from a narrow HBM focus to a wider embrace of general-purpose memory solutions, including GDDR and DDR.

In the unfolding narrative of the DRAM Three Kingdoms, Micron’s SOCAMM has clearly opened a new front. Samsung’s push for architectural transformation through CXL, along with its upcoming GDDR7, marks a robust counteroffensive. SK hynix, meanwhile, continues to hold the HBM crown, at least for now. But as memory technologies diverge to meet diverse computing needs, the future of this industry will belong not to a single ruler but to those most adept at adapting to a multi-architecture world.