Apple’s Smart Glasses Ambition: The Next Leap in Wearable AI

Signals full-scale development of proprietary wearable devices

Expanding the product lineup to include AI servers and next-generation MacBooks

CEO Cook views this as a strategic turning point for Apple

In the fast-evolving world of consumer technology, a quiet revolution is underway, one that moves computing away from our hands and pockets and onto our faces. This isn’t science fiction or speculative futurism. It’s Apple’s next major bet.

After defining the smartphone era with the iPhone, Apple is now charting a bold course toward wearable artificial intelligence. At the center of this strategy is the company’s highly anticipated smart glasses—an ambitious project combining custom silicon, advanced AI capabilities, and a lightweight form factor that aims to redefine the way humans interact with technology.

Apple’s smart glasses are not merely a peripheral. They are designed to be a cornerstone of the company’s post-iPhone era—a mainstream, intelligent wearable that brings computing into the line of sight. More than a display device, these glasses aim to understand, assist, and respond to their user and environment in real time. And they’re being built with unprecedented attention to power efficiency, AI integration, and user privacy.

With mass production targeted for late 2026 or 2027, the glasses are more than just another tech product. They mark a strategic pivot that could reshape the consumer hardware market and Apple’s role within it.

A Strategic Pivot Fueled by Custom Silicon

Apple’s roadmap to the smart glasses launch is being paved with silicon—custom silicon. Since 2020, Apple has been moving away from third-party chip suppliers like Intel and Qualcomm, instead building its own processors that offer greater control over performance, efficiency, and vertical integration. Now, that same strategy is at the core of its wearable ambitions.

According to a recent report by Bloomberg, Apple’s silicon design team has made significant progress on a new low-power chip specifically engineered for smart glasses. Based on the architecture of the Apple Watch’s energy-efficient chip, this new processor is being developed to control multiple cameras, run AI tasks, and sustain wireless connectivity—all while maintaining minimal energy usage to preserve battery life in a lightweight device. The custom chip is tailored to meet the unique requirements of face-worn wearables, and it represents a fusion of miniaturization, power management, and machine learning optimization.

Internally codenamed N401, the smart glasses are one of the most closely held projects inside Apple. Bloomberg’s sources suggest that the company is aiming for mass production between late 2026 and 2027, with testing and prototyping already well underway. Importantly, the glasses are being developed not as a companion device but as a standalone computing interface. They are expected to offer core functions such as voice control through Siri, real-time photo and video capture, audio playback, and hands-free calls. There are also suggestions that Apple Intelligence—Apple’s own suite of AI features—could be deeply embedded into the glasses, enabling context-aware assistance akin to an on-the-go personal assistant.

Apple CEO Tim Cook is reportedly pushing the project forward with intense focus. Several insiders have described his interest in the glasses as bordering on “obsessive,” with the device envisioned as the iPhone’s spiritual successor. In Cook’s view, Apple’s long-term future will be defined by ambient, always-available computing—technology that doesn’t require a screen in your hand but lives quietly and usefully on your body.

This vision extends beyond the glasses themselves. Apple is currently working on a number of other chips, including “Nevis” for camera-enabled Apple Watch models and “Glennie” for AirPods that incorporate camera modules. These developments hint at a unified ecosystem of intelligent, interconnected wearables.

The company’s roadmap also includes a custom modem lineup: the C1, which debuted in the iPhone 16e, followed by a higher-end C2 in 2026 and an ultra-premium C3 in 2027. On the computing front, new M6 (Komodo) and M7 (Borneo) chips for Macs are in development, alongside a high-performance chip named “Baltra” for Apple’s future AI servers. All of these processors are part of a single narrative: Apple wants to own the full stack, from silicon to services, especially as it ventures into the new frontier of wearable intelligence.

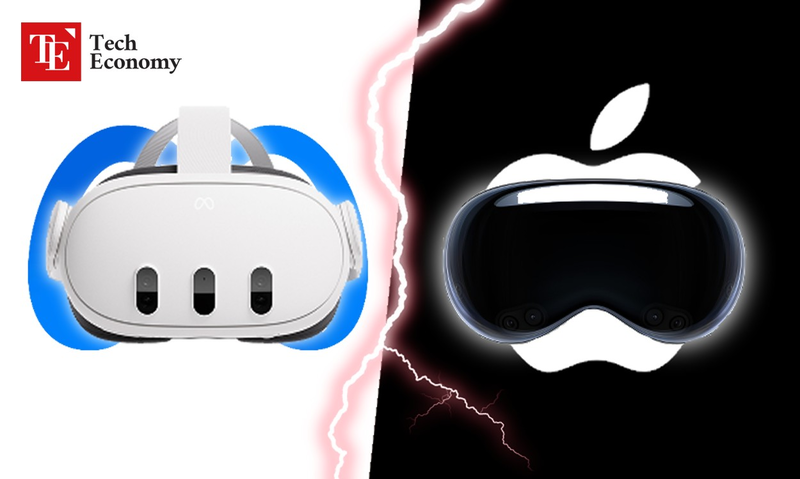

Rivals Push Innovation in the Smart Glasses Race

Apple’s entry into the smart glasses race is timely. The wearable AI landscape is already heating up, with major tech firms jockeying for dominance in what many see as the next great consumer platform.

Meta, which already leads the smart glasses segment with its Ray-Ban Meta line, is preparing to launch two next-generation models in 2025. These will feature enhanced AI capabilities, most notably “Super Sensing,” a suite of features that includes facial recognition and real-time environmental scanning. Super Sensing is part of Meta’s broader Live AI initiative, which aims to offer continuous AI interaction rather than the roughly 30-minute window available on its existing smart glasses. The new models are expected to identify individuals by their faces and assist with contextual awareness, an advancement that brings augmented cognition closer to reality.

Meta’s strategy is centered on embedding its AI tools directly into daily life through fashion-conscious, highly capable devices. The company has already proven that consumers are willing to wear smart glasses if they are comfortable, stylish, and useful. Now, Meta is doubling down on intelligence and interaction, hoping to widen its lead before Apple’s glasses reach the market.

But Meta isn’t Apple’s only competition. Amazon, too, has made a strategic entrance into the space. Through its Alexa Fund, Amazon has invested in IXI, a Finnish startup developing smart glasses with autofocus functionality. These glasses are engineered to help users with presbyopia and other vision impairments by automatically adjusting the focus of the lenses. IXI recently closed a €32.2 million Series A funding round, which also included backing from Eurazeo and the Finnish state VC, TESI.

Amazon’s interest in IXI goes beyond eyewear innovation. Analysts believe the e-commerce giant sees IXI’s technology as a natural extension of the Alexa ecosystem. Imagine glasses that not only adjust focus automatically but also respond to voice commands, offer schedule reminders, or display notifications directly in the user’s field of vision. By integrating Alexa into smart glasses, Amazon could offer a uniquely intuitive and accessible experience—especially for aging populations or people with visual limitations.

This three-way race—between Apple’s design-first innovation, Meta’s AI-driven ambition, and Amazon’s smart home synergy—marks a new battleground in tech. Each company is approaching the challenge from a different angle, but the destination is the same: wearable devices that merge intelligence, interaction, and ubiquity.

Redefining Personal Technology Through Wearable AI

The implications of Apple’s smart glasses extend far beyond the device itself. They represent a redefinition of personal technology—one where intelligence is embedded into the objects we wear, and computing becomes both invisible and indispensable.

Unlike smartphones, which require manual engagement, or smartwatches, which remain peripheral, smart glasses offer ambient interaction. They sit comfortably on the face and can provide contextual information, audio feedback, and real-time assistance without the need for active touch or constant visual focus. This passive yet intelligent form of computing aligns perfectly with the direction in which personal tech is heading: unobtrusive, anticipatory, and always available.

Apple’s greatest strength in this arena lies in its control over the ecosystem. By designing its own chips, operating systems, and user interfaces, Apple can ensure a smooth, integrated experience across devices. This vertical integration will be essential for the smart glasses to feel natural, responsive, and private. Privacy, in particular, is likely to be a differentiating factor, as concerns about facial recognition and AI surveillance grow. Apple has long positioned itself as a protector of user data, and its smart glasses are expected to reflect that ethos.

Furthermore, Apple’s design language and brand trust could be powerful assets. While Meta and Amazon may push the envelope in terms of raw functionality, Apple is likely to focus on seamless usability, aesthetic appeal, and consumer confidence. If history is any guide, Apple doesn’t aim to be first—it aims to be best.

In that sense, the smart glasses are not an isolated product but a strategic convergence of Apple’s investments in chip design, AI, camera hardware, and user experience. They build upon the groundwork laid by the iPhone, Apple Watch, and Vision Pro—but with the aim of delivering something more casual, more wearable, and more widely adopted.

If successful, these glasses could serve as the first truly mainstream face-worn computer, ushering in an age of personal AI companions that assist users with everything from navigation and reminders to communication and real-time translation.

The year 2027 may seem distant, but in the tech world it is just around the corner. The race is on, and Apple’s smart glasses project, driven by custom silicon, CEO vision, and an integrated ecosystem, may well define the next frontier in how we see and shape our digital lives.