From mouth to mind: Internal speech interfaces and the end of language as a barrier

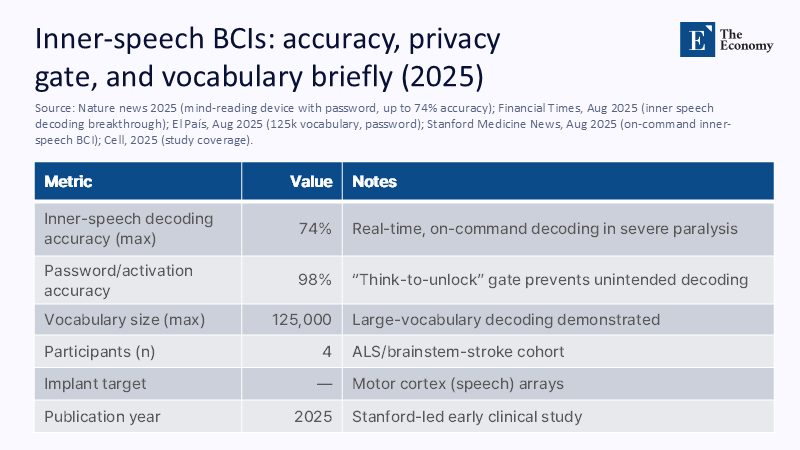

For two decades, we've treated language as a human input/output problem: fingers to type, lungs and lips to speak, and years of training to master a second language. That design assumption has just been broken. In August 2025, a Stanford-led team reported a brain implant that decoded "internal speech" (silent, self-generated words) on command with up to 74% accuracy from a vocabulary of 125,000 words, protected by a thought password that prevented accidental decoding in about 98% of cases. This leap matters less as a clinical novelty than as a change in performance: it shifts communication from muscles to the mind and turns language from a barrier into a software layer. At the same time, non-invasive decoders have begun to reconstruct continuous language from brain activity that encodes meaning, not just sound, implying that translation could draw on the same semantic substrate. If education is an economy of attention and time, internal speech interfaces are a new general-purpose technology. The policy question is not whether schools and universities will be prepared for this, but how quickly pedagogy, assessment, and safeguards can be redesigned before the interface reaches students' backpacks.

From assistive intent to a general-purpose interface

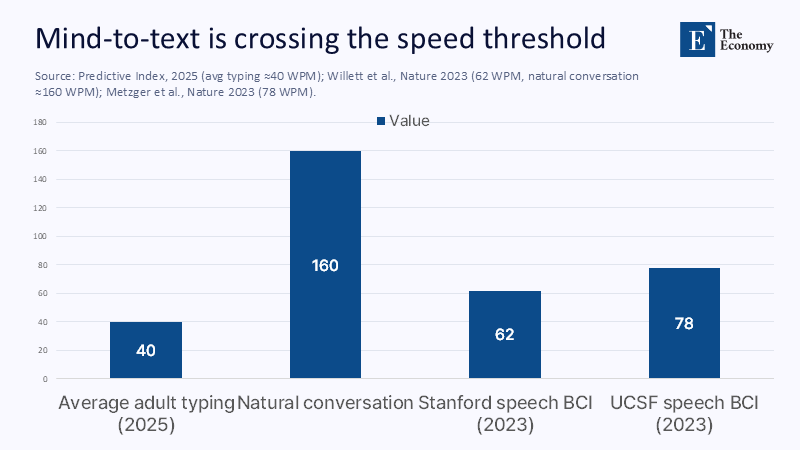

The dominant framework for brain-computer interfaces (BCIs) has been clinical: restoring communication for people who cannot move or speak. This framework remains morally necessary, and the first significant benefits will arise there. But a purely medical lens misses the structural shift that unfolds as the decoding of internal speech becomes practical. Communication is an information-theory problem: how fast can we convey the intended message with a tolerable error rate? When BCIs decode intended phonemes or entire words directly from neural signals, they bypass the mechanics that limit typing, dictation, and handwriting. In 2023, two invasive speech neuroprostheses reached 62 and 78 words per minute, already roughly three times the previous record, and the 2025 work targets real-time decoding of internal speech, removing the fatigue and audible artifacts of "attempted speech". Translate that from clinic to classroom, and you have a new interface that could make note-taking, collaborative writing, and even exam answers silent, hands-free, and fast.

A second reframing concerns language learning. If decoders target semantic representations (the brain's gist of a sentence), then the output language may be a rendering option rather than a cognitive limitation. Studies from 2023 onwards show that semantic decoders trained on fMRI can reconstruct continuous language without requiring the subject to say words aloud. New research in bilinguals suggests overlap in brain representations across languages, while in 2025, brain-to-text pipelines are increasingly linking decoded semantics to large language models for generation. For education, this means accessible support for multilingual classrooms and students with language impairments, provided safeguards keep pace. But the path is conditional: decoders remain individualized, accuracy varies, and not everyone experiences a vivid "inner voice," a fact that policy must recognize to avoid creating a new inequality.

What the latest figures offer

The past 18 months have redefined the possible. A May 2024 study, summarized in Scientific American, decoded silently imagined words from single-neuron activity in the supramarginal gyrus, reaching an accuracy of 79% in one participant on a small vocabulary and showing shared neural representations between internal and vocalized speech. In August 2025, a new Stanford paper described the decoding of internal speech from motor cortex arrays with an on-command accuracy of up to 74%, a vast vocabulary, and a privacy-protecting password. These systems differ from the 2023 "speech BCIs" that required attempted vocal tract movements. Internal speech systems work when users merely think of the word. Together, these trajectories imply convergence: higher-density electrodes improve phoneme decoding; machine learning models stitch phonemes into words; and semantic decoders provide scaffolding when the phonetic signals are noisy. The result is a plausible path to fluent, silent production.

There's also the issue of money. Elon Musk's Neuralink has become a powerful accelerator for both hardware and the public's imagination, raising $280 million in 2023 and an additional ~$600-650 million in 2025 at a valuation of $8.5-9 billion, after the first in-human demonstrations showed cursor control and typing from an implanted device. Whether or not Musk directly funds internal speech research, this capital compresses the timelines for implantable, high-channel wireless devices, which are the prerequisites for broader adoption. Investment is not destiny; safety issues, thread retraction, and long-term stability remain open questions. But when capital, capacity, and clinical milestones coincide, ecosystems change. Education leaders should assume that hardware will not be the barrier by the time their next five-year technology plans mature.

Performance, not hype: What faster "thinking in text" means in practice

Speed matters because modern education is built on time boxes. Typical adult typing averages 40-60 words per minute. Skilled typists can sustain 80+. Stenographers reach 100+. In 2023, invasive speech BCIs reached 62-78 wpm in laboratory settings for severely paralyzed participants, moving toward natural conversation at ~160 wpm. If internal speech systems maintain comparable or higher rates without breath control or muscle fatigue, students who "type all day" could regain hours each week. The benefits are not only in speed but also in cognitive load: composing silently while maintaining eye contact, doing laboratory work, or handling instruments can deepen engagement. The caution is that the high wpm numbers are still limited to research platforms, with individualized training and expert teams. Even so, the direction is clear enough to warrant pilots in assistive settings now.

Multiple analyses (McKinsey's remains the standard reference, echoed in 2024-2025 workplace data) estimate that about a quarter to a third of knowledge-work time is spent on email and written coordination. A conservative scenario: if a student or researcher produces 2,000 words per day at 50 wpm, internal speech output at 100-150 wpm could cut composing time by at least half. Add hands-free capture during lab or field work, and the gains compound. None of this requires abandoning the teaching of writing. It requires teaching structure, argument, and use of sources in a world where transcription is cheap. The bottleneck moves from pressing keys to thinking clearly, organizing ideas, and expressing them well, which are, ironically, the classic goals of education.
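The arithmetic behind that scenario can be checked with a short sketch. It assumes a steady output rate with no pauses for thinking or correction, which is an idealization; the function name is illustrative only.

```python
def composing_minutes(words: int, wpm: float) -> float:
    """Minutes needed to produce `words` at a steady rate of `wpm`."""
    if wpm <= 0:
        raise ValueError("wpm must be positive")
    return words / wpm


DAILY_WORDS = 2_000   # daily output used in the scenario
BASELINE_WPM = 50     # typing rate used in the scenario

baseline = composing_minutes(DAILY_WORDS, BASELINE_WPM)  # 40 minutes
for wpm in (100, 150):
    minutes = composing_minutes(DAILY_WORDS, wpm)
    saved = baseline - minutes
    print(f"{wpm} wpm: {minutes:.1f} min, saves {saved:.1f} of {baseline:.0f} min")
# 100 wpm: 20.0 min, saves 20.0 of 40 min
# 150 wpm: 13.3 min, saves 26.7 of 40 min
```

Under these assumptions the saving is 20 to 27 minutes a day on transcription alone; time spent planning and revising is not captured.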

Language learning, redefined around semantics

The appeal of the claim that "we may not need to learn a new language" rests on whether decoders can extract meaning independent of language. The evidence is growing. UT Austin's 2023 semantic decoder reconstructed continuous language from fMRI by mapping brain activity to semantic embeddings. Studies from 2024-2025 report cross-subject and even cross-lingual semantic alignment that improves the decoding of perceived speech and visual scenes. In bilingual samples, a shared representational structure appears alongside language-specific traces, suggesting that a concept-trained decoder could render results in any target language through a language model. In classrooms, this would look like live captioning or note-taking in a student's preferred language without the stigma or time cost of switching languages. It could also support students with aphasia or apraxia by bypassing disrupted motor speech pathways.

However, "thought is universal" promises too much. Semantic decoders require extensive per-user calibration, are sensitive to attention and cooperation, and today require scanners or implants. Non-invasive systems are bulky and slow. Invasive systems are surgical and limited to severe disability. Not everyone reports a vivid internal monologue, and cultural and linguistic diversity shapes the way concepts are rehearsed internally. Therefore, teaching must keep language learning at the centre while using internal speech tools as scaffolding: vocabulary and grammar continue to unlock literature, nuance, and civic engagement. The fundamental transformation is not abandoning languages, but teaching students to work across them, using decoders to speed up comprehension, translation, and writing practice while guarding against dependence and misuse.

The Risk Architecture: Privacy, Consent, and Equity

Internal speech BCIs pose a unique risk: misinterpretation or exposure of thoughts that were never meant to be expressed. The 2025 Stanford project proactively added a cognitive "password" that must be silently imagined before decoding is engaged, precisely to avoid accidental leakage. Law and policy are beginning to catch up. The EU Artificial Intelligence Act came into force in August 2024 with a phased implementation; among its first bans, it prohibits emotion inference in education, and it sets high-risk requirements for systems used in schools. In Latin America, Chile's 2021 constitutional neurorights have already yielded a Supreme Court ruling ordering the deletion of commercially collected neural data, and 2025 scholarship is converging on mental privacy as a separate right. Educational deployments must build on this trajectory: neural data are a special category. Consent must be granular and revocable, and "off by default" should be a hard requirement, not a checkbox.
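What "off by default" behind a password could mean in software can be sketched as a simple gate. This is a hypothetical illustration, not the Stanford system's design: the class name, the passphrase, and the re-lock phrase are all assumptions, and a real system would detect the password in neural activity rather than in already-decoded strings.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PasswordGate:
    """Release decoded text only after the passphrase has been decoded.

    Hypothetical sketch: names and phrases are illustrative assumptions.
    """
    passphrase: str
    lock_phrase: str = "stop"   # assumed phrase that re-locks the gate
    unlocked: bool = False      # off by default

    def feed(self, decoded: str) -> Optional[str]:
        text = decoded.strip().lower()
        if not self.unlocked:
            if text == self.passphrase.lower():
                self.unlocked = True
            return None          # nothing is released while locked
        if text == self.lock_phrase.lower():
            self.unlocked = False
            return None
        return decoded


gate = PasswordGate(passphrase="open notebook")
stream = ["grocery list", "open notebook", "hello class", "stop", "private thought"]
released = [out for out in map(gate.feed, stream) if out is not None]
print(released)  # ['hello class']
```

The design choice worth noting is that the locked state is the default and the passphrase itself is never emitted, so anything decoded before unlocking or after re-locking is dropped rather than stored.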

Equity is the twin risk. Early systems will be expensive, personalized, and, for invasive versions, medically restricted. If accommodations are offered to some students and not others, the comparability of assessment fractures. Policies should route adoption through disability services, pair each device with non-neural alternatives that achieve similar learning goals, and enforce local processing with rigorous data minimization. Teachers will also need professional development in a new etiquette of silent collaboration: when students can "talk" by thought, the temptations of surveillance will increase, and the AI Act's ban on emotion inference in classrooms should mark a bright red line. Institutions must publish data retention schedules measured in hours, not semesters, and require periodic audits by third parties. Without these teeth, we risk sleepwalking into an extractive pedagogy.
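A retention schedule measured in hours is easy to state in code. The following is a minimal sketch under stated assumptions: the 24-hour window, the record fields, and the function name are all hypothetical, and only decoded text is modeled because raw neural signals are assumed never to leave the device.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List

# Hypothetical retention window; the policy goal is only "hours, not semesters".
RETENTION = timedelta(hours=24)


@dataclass(frozen=True)
class TextRecord:
    """Decoded text only; raw neural data is assumed to stay on the device."""
    student_id: str
    text: str
    created_at: datetime


def purge_expired(records: List[TextRecord], now: datetime,
                  retention: timedelta = RETENTION) -> List[TextRecord]:
    """Return only the records younger than the retention window."""
    return [r for r in records if now - r.created_at < retention]


now = datetime(2025, 9, 1, 12, 0, tzinfo=timezone.utc)
records = [
    TextRecord("s1", "lab note", now - timedelta(hours=2)),
    TextRecord("s1", "old draft", now - timedelta(hours=30)),
]
kept = purge_expired(records, now)
print([r.text for r in kept])  # ['lab note']
```

A third-party audit could then reduce to checking that no stored record is older than the published window.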

A blueprint for teachers and policymakers

Start with accommodations that resolve obvious pain points. For students with dysarthria, ALS, or locked-in syndrome, internal speech BCIs promise less fatigue and faster expression than attempted-speech decoders. Pilots should pair clinical teams with assistive technology offices, run opt-in trials with explicit thought-password setup, and publish learning outcomes along with error profiles. They should prefer systems that keep raw neural data on the device and store only text output, ideally in open formats that integrate with learning management systems. The goal is not to adopt gadgets but to improve learning: fewer hours of transcription, more time spent analyzing, questioning, and revising.

Second, redesign assessment around ideas, not keystrokes. If internal speech output achieves 60-100+ wpm for some users, closed-book exams that reward the rapid transcription of memorized facts will reward access to the interface. Teachers should lean towards open-resource, process-oriented assessment: argument maps, oral defenses, source triangulation, and untimed portfolios. For language courses, invert the risk: require students to show how internal speech translation helps with comprehension, while also demonstrating grammatical control and cultural knowledge. For writing-heavy disciplines, teach prompt engineering for decoders the way we once taught typing drills, always with a parallel course in bias, privacy, and failure modes. Institutions that make these changes early will stabilize expectations before the hardware becomes mainstream.

The economic case for schools and systems

Even narrow adoption can pay off quickly. Knowledge workers, including students, spend about a quarter to a third of their day on email and written coordination, a pattern that has been documented repeatedly over the past decade and confirmed in 2024-2025 work-trend reports. If internal speech interfaces cut composing time for targeted tasks in half, that time can flow into feedback, experimentation, and peer review. Cash-constrained systems should prioritize internal speech pilots where time is most valuable: lab notebooks, clinical rotations, fieldwork, and special education. Track not only speed but also comprehension and equity: do students understand more, faster, or merely produce more text? Investments should be modest and gradual, with sunset clauses if no gains materialize. It's about buying attention, not chasing a headline.

Private capital will continue to push the boundaries. Neuralink's valuation jump and multi-hundred-million-dollar rounds have energized implantable hardware efforts, while non-invasive lines, from generic semantic decoders to fMRI-to-text pipelines, are attracting academic-industry consortia. Education must capitalize on this wave without ceding governance. Public-interest test beds will help: they could set open telemetry standards, publish negative results, and insist on interoperability to prevent schools from being locked into individual vendors. If we treat internal speech BCIs as we treated the first laptops (first assistive, then specialized, finally ubiquitous), we can avoid the cycle of hype and disappointment that haunts ed-tech. The key is to commit early to principles that preserve agency and dignity while accelerating access to ideas.

The bottleneck is moving, and policy must move with it

The striking figure is not only 74% accuracy or 125,000 words; it is the disappearance of muscles from the communication loop. When words no longer wait for fingers or breath, language ceases to be a barrier and becomes a routing layer. This will free students who have long been held back by slow transcription, motor fatigue, or second-language barriers. It will also tempt institutions to monitor where they should accommodate, and to mistake more text for more learning. The right response is pragmatic ambition: deploy internal speech tools first where they close disability gaps; write guardrails that put consent and deletion above curiosity; redesign assessment for thinking, not output; and teach language as a cultural technology, even when we can translate at the speed of thought. Education has always been about turning attention into understanding. Internal speech interfaces make attention more legible to machines. Our job is to ensure that legibility serves students: quietly, quickly, and on their terms.

The Economy Research Editorial

The Economy Research Editorial is located in the Gordon School of Business and Artificial Intelligence, Swiss Institute of Artificial Intelligence.

References

Blackrock Neurotech. (2023, August 23). BCI speech achieves 62 WPM. https://blackrockneurotech.com/ (summary of Nature results).

European Commission. (2024–2026). AI Act implementation timeline. Retrieved 2025-08-15.

EU Artificial Intelligence Act (unofficial website summarising Article 5). (n.d.). Prohibited AI practices. Retrieved 2025-08-15.

Financial Times. (2025, August 15). Scientists are developing a brain implant capable of decoding internal speech.

Hasson, E. R. (2025, August 14). A new brain device is the first to read internal speech. Scientific American.

Jamali, M., et al. (2024). Semantic encoding during language comprehension at single-cell resolution. Nature.

McKinsey Global Institute. (2023). The economic potential of generative AI. (Repeats previous email/search time findings.)

Metzger, S. L., et al. (2023). A high-performance neuroprosthesis for speech decoding and avatar control. Nature. (Open-access overview.)

Naddaf, M. (2024, May 16). Device decodes "internal speech" in the brain. Scientific American. (Coverage of a Nature Human Behaviour study.)

Neuralink. (2025, June 2). Neuralink raises $650 million Series E. Company update.

Predictive indicator. (2025, January 28). What is a good WPM for typing? (Benchmarks for adult typing speeds).

Reuters. (2025, May 27). Musk's Neuralink raises cash at a valuation of ~$9 billion.

Stanford Report. (2025, August 14). Scientists are developing an interface that "reads" thoughts from patients with speech problems. (Interview, password findings, and internal speech).

Tang, J., et al. (2023). Semantic reconstruction of continuous language from non-invasive brain recordings. Nature Neuroscience.

The Times (London). (2025, August 15). The brain chip could translate thoughts into speech (125k vocabulary, 74% accuracy, 98% password activation).

Wandelt, S. K., et al. (2024). Representation of internal speech by single neurons in human supramarginal gyrus. Nature Human Behaviour. (Coverage and measurements via Scientific American.)

Willett, F. R., et al. (2021). High-performance brain-to-text communication via handwriting. Nature. (Imagined-handwriting study; frame for the speed record, widely reported in 2023-2024 coverage.)

Wired. (2023, August 23). Brain implants that help paralyzed people speak have just broken new records (62 and 78 wpm).

Future of Privacy Forum. (2024, March 20). Privacy and the rise of "neurorights" in Latin America (2023 neuroprivacy decision in Chile).
