Pattern machines in logical order: Why AI's Olympiad "victories" must reshape, not replace, the teaching of reasoning

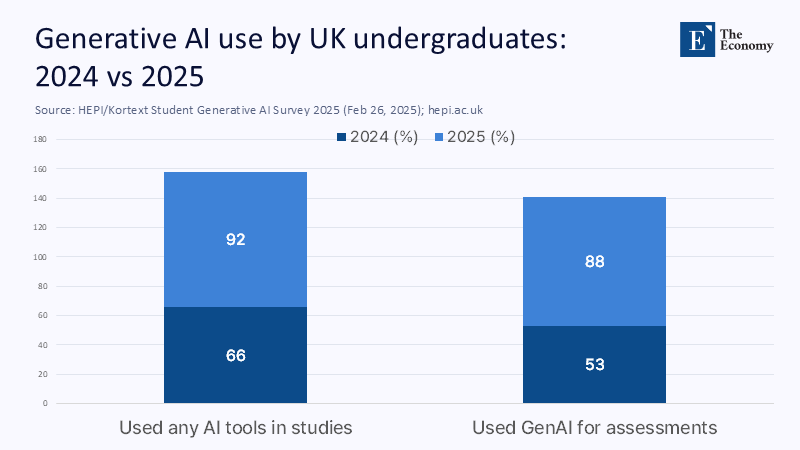

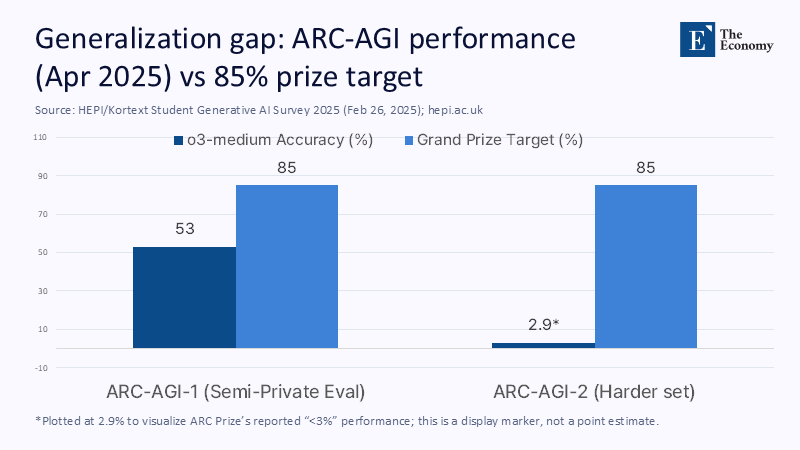

In July 2025, Google DeepMind reported that its Gemini "Deep Think" system solved five of the six problems at the International Mathematical Olympiad, scoring 35 of 42 points, which is gold-medal level under the competition's scoring rubric. This is not just a feat of technology. It is a testament to the potential of artificial intelligence to inspire admiration and curiosity, and to spark new ideas and approaches in education. Yet in the same year, the ARC-AGI benchmark – explicitly designed to measure generalisation to new tasks – remained unsolved, with state-of-the-art performance hovering around 50%, far from the 85% target set by the organisers. Both statements are true. Together, they describe the current limits of artificial intelligence: it excels at problems that can be formalized against templates and precedents, but struggles with tasks that require innovation and deductive leaps. In classrooms already flooded with generative tools (over 90% of students report using them), the policy question is no longer whether AI can deliver. It is how to teach reasoning when the machines are, at their core, pattern machines.

Redefining the question: From "Can AI solve problems?" to "Can AI create unknowns?"

Public debate sets up a false dichotomy: whether machines "think" or not. This framing misses everything that matters to education. The generative systems that most students now use are trained to minimize next-token prediction error over unimaginably large bodies of text. They interpolate patterns with uncanny fluency. They are not searching for conceptual innovation. Even when the models post impressive scores on Olympiad-level tasks, mathematicians point out that these successes travel through narrow corridors: carefully formalized inputs, heavy reliance on existing templates, and systematic search. The achievement is real. The mechanism remains pattern-first. An educational system built on inference, evidence, and criticism cannot entrust innovation to tools that are, by design, statistical imitators. If we want graduates who can pose novel problems, we need to teach them to produce and defend structures that no library of templates can offer.

The need for this reform is urgent, because the adoption of AI in education has already outpaced the policy debate. In UK higher education, the proportion of students using generative AI rose from two-thirds in 2024 to over nine in ten in 2025, with almost one in five admitting to including AI-generated text directly in assessments. The rules that once policed plagiarism are ill-suited to a world where students routinely co-write with machines. The integrity problem is not just catching cheaters; it is maintaining a culture of proof and explanation when help is always available. In this world, the decisive policy move is neither prohibition nor uncritical embrace, but redesign: assessments must reward innovation, coherence, and supervised defense – capabilities that models are less likely to mimic and that are more central to trained judgment.

What the latest figures show

Take the headline results. In 2024, DeepMind demonstrated silver-medal-level performance in Olympiad geometry, solving a live IMO problem after formalization and reporting high success rates on historical geometry sets. In 2025, an advanced Gemini build reportedly achieved a gold-level score across the full exam. Nature's coverage earlier in 2025 also noted dramatic advances in theorem-proving systems. Read alongside the skeptical Scientific American piece published a few weeks after the 2025 event, the evidence paints a picture of top-down progress bounded by bottom-up limits: when problems can be expressed in forms that suit search – syntactic structures, invariants, well-behaved domains – machines sing. Where the representation is messy or inventive leaps are required, the melody falters. None of this proves that AI "can't think." It shows, more precisely, that today's high scores are products of formalized scaffolding and template leverage, not of independent conceptual production. This distinction matters for the curriculum.

Consider the evaluation regimes that were explicitly created to eliminate dependence on templates. The ARC-AGI benchmark targets fluid intelligence – adapting to new tasks without preparation – and despite step-change improvements in 2024, the field is still far from the target threshold. Research communities have responded by exploring "compositional generalization," showing that even the top models stumble when a task requires combining learned skills in unfamiliar ways, and proposing neurosymbolic hybrids and "System-2" pipelines to narrow the gap. The technical hope is real, and there are promising prototypes. But for policy, the salient fact is that generalization remains hard on tests built to resist contamination by training data or precedent. The closer schoolwork sits to a pattern space, the more help the machine can give. The closer it sits to the unfamiliar, the more the teacher matters. This underscores the value of human judgment in education, even given the potential of artificial intelligence.

Pedagogy under pressure: The urgent need to design assessments for reasoning, not imitation

The first consequence is architectural: move graded work to artifacts that capture reasoning, not just results. A proof portfolio with a version history. An oral defense (a "chalk talk") that forces students to reconstruct key steps and handle adversarial questions. A design note that explains modeling choices and failure modes. These forms foreground process, error analysis, and reasoning, the dimensions where pattern machines are weakest and where human judgment shines. They also align with broader guidance: UNESCO's global framework urges human-centric competences and the validation of knowledge, while the OECD's 2025 agenda pushes systems to rethink "what teachers should teach" when AI is powerful but not general. Teaching towards explanation is not nostalgia. It is resilience, because explanation is what students need to carry over to problems that an LLM has never seen.

Second, separate "with-AI" tasks, where AI is used as a tool, from "without-AI" tasks, where AI is deliberately excluded. Allow and even require AI for low-stakes ideation, quick code prototypes, or literature maps, and then set capstone tasks that are closed by design: unseen datasets explored in supervised labs, handwritten essays, whiteboard proofs, or timed problem-posing sessions in which students must create and justify a new question before attempting a solution. Early adopters are already moving parts of the curriculum this way, from high schools dropping take-home essays in favour of supervised writing to universities piloting viva voce assessment. None of this treats AI as an enemy. It clarifies the division of labor: the model can help with patterns. The human must carry the reasoning.

Integrity without collateral damage

Detection alone can't police a world of ambient AI. The numbers explain why. Even when an AI-writing detector boasts a low false-positive rate, at the scale of first-year university writing that rate translates into hundreds of thousands of innocent essays flagged in the United States alone, an ethical minefield documented by institutions and ed-tech providers alike. Reporting in the mainstream press shows an increase in AI-related misconduct alongside inconsistent enforcement, while policy guidance from universities has warned against over-reliance on unvalidated detectors since 2023. Integrity strategies built on single-score detectors risk both injustice and the erosion of trust.
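The scale argument is simple arithmetic, and a short sketch makes it concrete. The figures below are illustrative assumptions, not numbers taken from the sources cited here: a hypothetical volume of 20 million essays, a hypothetical 1% false-positive rate, and a hypothetical detector that catches 90% of AI-written work. The function names are likewise invented for this example.

```python
def expected_false_flags(total_essays: int, honest_share: float,
                         false_positive_rate: float) -> float:
    """Expected number of honestly written essays that get flagged."""
    return total_essays * honest_share * false_positive_rate


def share_of_flags_that_are_wrong(honest_share: float,
                                  false_positive_rate: float,
                                  true_positive_rate: float) -> float:
    """Fraction of all flagged essays that were in fact written honestly."""
    false_flags = honest_share * false_positive_rate
    true_flags = (1.0 - honest_share) * true_positive_rate
    return false_flags / (false_flags + true_flags)


if __name__ == "__main__":
    # All inputs are hypothetical, chosen only to show the order of magnitude.
    essays = 20_000_000   # assumed yearly essay volume
    fpr = 0.01            # assumed false-positive rate (1%)
    tpr = 0.90            # assumed detection rate for AI-written essays

    # Worst case for the detector's reputation: every essay is honest.
    print(round(expected_false_flags(essays, 1.0, fpr)))

    # If 80% of essays are honest, what share of flags hit innocent students?
    print(round(share_of_flags_that_are_wrong(0.80, fpr, tpr), 4))
```

Under these assumed inputs, even a detector that is right 99% of the time on honest work produces wrongful flags in the hundreds of thousands, which is why a flag is better treated as a prompt for conversation than as a finding.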

The alternative is evidence at the workflow level. Require audit trails: prompt history, timestamped drafts, and references to sources. Grade for explanation: how a student chose an approach, why they rejected alternatives, and what they learned from mistakes. Use detection as a check, not a verdict – one signal among many that triggers a conversation and, if necessary, a viva. HEPI's 2025 survey shows that students themselves see nuance: most use AI to understand and summarize, not to substitute wholesale, and many are unsure of local rules. A policy that treats students as partners – clear allowances, explicit "no-AI" zones, and authentic assignments – will reduce abuse by giving them a path to learn what the model can't offer: accountable reasoning.
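One way to picture such an audit trail is as a small record attached to each submission. The sketch below is purely illustrative: the class names, field names, and the `next_step` rule are assumptions made for this example, not a description of any existing system or institutional policy. It encodes only the two ideas in the paragraph above: the trail holds prompts, timestamped drafts, and sources, and a detector flag can start a conversation but never returns a verdict.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import List


@dataclass
class Draft:
    saved_at: datetime  # when this version was saved
    text: str


@dataclass
class AuditTrail:
    """Hypothetical per-submission evidence record."""
    student_id: str
    ai_used: bool                                   # student's own disclosure
    prompts: List[str] = field(default_factory=list)
    drafts: List[Draft] = field(default_factory=list)
    sources: List[str] = field(default_factory=list)

    def missing_evidence(self) -> List[str]:
        """List the kinds of evidence the trail does not yet contain."""
        missing = []
        if self.ai_used and not self.prompts:
            missing.append("prompt history")
        if not self.drafts:
            missing.append("timestamped drafts")
        if not self.sources:
            missing.append("source references")
        return missing


def next_step(trail: AuditTrail, detector_flagged: bool) -> str:
    """A detector flag or a gap in the trail triggers a conversation only."""
    if detector_flagged or trail.missing_evidence():
        return "conversation"
    return "no action"


if __name__ == "__main__":
    trail = AuditTrail(
        student_id="s-001",
        ai_used=True,
        prompts=["Summarise the main objections to my thesis."],
        drafts=[Draft(datetime(2025, 3, 1, 10, 0), "First outline")],
        sources=["OECD (2025)"],
    )
    print(trail.missing_evidence(), next_step(trail, detector_flagged=True))
```

The design choice worth noting is that no branch returns "misconduct": any escalation to a viva is left to a human after the conversation.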

Creating a culture of proof throughout the curriculum

Mathematics is not the only place where proof matters. In economics, a policy note requires reasoned causal assumptions. In biology, a lab report requires a defended protocol. In history, an argument must survive cross-examination against primary sources. A cross-disciplinary culture of proof creates common expectations: stating premises; making claims; supplying warrants; and anticipating and responding to reasonable counterclaims. If AI tools are allowed at any stage, require disclosure and reflection on what the tool contributed – and what it missed. Over time, students learn to use pattern machines as assistants that lay the groundwork for reasoning rather than oracles that substitute for it.

To make this a reality, invest in the capacity of teachers. The OECD's Education Policy Outlook 2024 underlines the uncomfortable arithmetic: systems need to manage teacher supply and upskilling at the same time. Professional development should shift towards designing tasks for an AI-saturated environment: calibrating novelty, writing adversarial rubrics, and running fair oral examinations. Universities can share banks of "unknown" items – tasks constructed to be unfamiliar but instructive – and rotate them under embargo to reduce leakage into training sets. Where resources are limited, start small: one proof-based assignment per module, one oral checkpoint per term, and a class colloquium where students present that assignment. The payoff is cultural, not just technical: a shared sense that explanation is the currency of learning.

A research and policy agenda for teaching with – and past – machine models

Schools should not stand idly by while laboratories chase generalization. The compositionality literature shows why "few skills" do not automatically compose. When tasks require combining sub-skills in unseen ways, performance collapses unless models are scaffolded with structure or training distributions are surgically adjusted. Promising directions – prompting skills in context, explicit tool-use diagrams, and neurosymbolic hybrids – may narrow the gap. For policy, these are opportunities to create research-practice partnerships: classrooms as testbeds for methods that prioritize symbolic structure, transparency, and test-time reasoning. The question for teachers is not whether a future system will "really think," but how to organize learning around today's tools without conceding the intellectual advantage.

Standards bodies and ministries can consolidate this agenda. UNESCO's guidance – updated in 2025 – centres on human-centric development and the validation of knowledge. The OECD's 2025 update calls for a gradual revision of curricula in the context of powerful artificial intelligence. Leading benchmarking efforts are pushing toward third-party audits and two-way listening evaluation. Pair these high-level signals with local pilots. Require explainability artifacts for each use of AI in assessment. Publish module-level "AI maps" that tell students where tools are allowed. Equalize resources, so that access to help does not track privilege. As general-purpose models creep toward tougher benchmarks, the competitive advantage of education remains what it has always been: cultivating minds that can produce patterns, not just recognize them.

Innovation as a learning goal

If one fact defines the moment, it is the coexistence of gold-level scores on official Olympiad problems and persistent failure on benchmarks designed to capture adaptability to the unknown. Pattern machines are amazing instruments. They are not yet generators of genuine innovation. The job of education is to keep innovation at the center: to teach students to ask sharp questions, defend extraordinary claims, and change their minds for reasons. This requires assessments built for explanation, policies that reward process, and a culture of proof that travels across all disciplines. It also requires humility about detectors and honesty about help. We can let machines speed up our work where patterns dominate (summaries, outlines, boilerplate) and continue to insist that the human voice carries the argument. On this view, the Mathematical Olympiad headlines do not pose a threat to schooling. They are a reminder of its purpose. We don't teach to beat an LLM. We teach to think beyond the template library.

The Economy Research Editorial

The Economy Research Editorial is located in the Gordon School of Business and Artificial Intelligence, Swiss Institute of Artificial Intelligence.

References

ARC Prize. (2024, December 5). ARC Prize 2024: Technical Report. arXiv/ARC Prize.

Castelvecchi, D. (2025, February 7). DeepMind AI crushes tough maths problems on par with top human solvers. Nature News.

DeepMind. (2024, July 25). AI achieves silver-medal standard solving International Mathematical Olympiad problems. DeepMind Blog.

DeepMind. (2025, July 21). Advanced version of Gemini with Deep Think officially achieves gold-medal standard at the International Mathematical Olympiad. DeepMind Blog.

Freeman, J., et al. (2025, February 26). Student Generative AI Survey 2025. HEPI / Kortext.

He, C., Luo, R., Bai, Y., et al. (2024). OlympiadBench: A challenging benchmark for promoting AGI with Olympiad-level bilingual multimodal scientific problems. arXiv.

Li, Z., et al. (2024). Understanding and Patching Compositional Reasoning in LLMs. Findings of ACL 2024.

MIT Sloan EdTech. (n.d.). When AI Gets It Wrong: Addressing AI Hallucinations and Bias. Retrieved 2025.

OECD. (2024, November 25). Education Policy Outlook 2024. OECD Publishing.

OECD. (2025, May 23). What should teachers teach and students learn in a future of powerful AI? OECD Publishing.

Packback. (2025, June 9). The hidden costs of detection-only academic integrity solutions. Packback Blog.

Riehl, E. (2025, August 7). AI took on the Math Olympiad, but mathematicians aren't impressed. Scientific American.

Turnitin. (2023, August 16). Guidance on AI detection and why we're disabling Turnitin's AI detector. Vanderbilt University Teaching Center.

UNESCO. (2023, updated 2025). Guidance for generative AI in education and research. UNESCO.

The Guardian. (2025, June 15). Revealed: Thousands of UK university students caught cheating using AI. The Guardian.

TIME. (2025, January). AI models are getting smarter. New tests are racing to catch up. TIME Magazine.

Teqnoverse. (2025). The illusion of thinking: AI doesn't think, it just matches patterns. Medium.

Reddit. (2024–2025). Threads on controversies about AI performance and detection.
