"No Risk of Stalking or Getting Dumped": People Are Dating AI Instead of Real Friends

- AI companion market growing explosively
- Over 160 services offer “AI lovers”
- Potential side effects include pseudo-attachment and emotional isolation

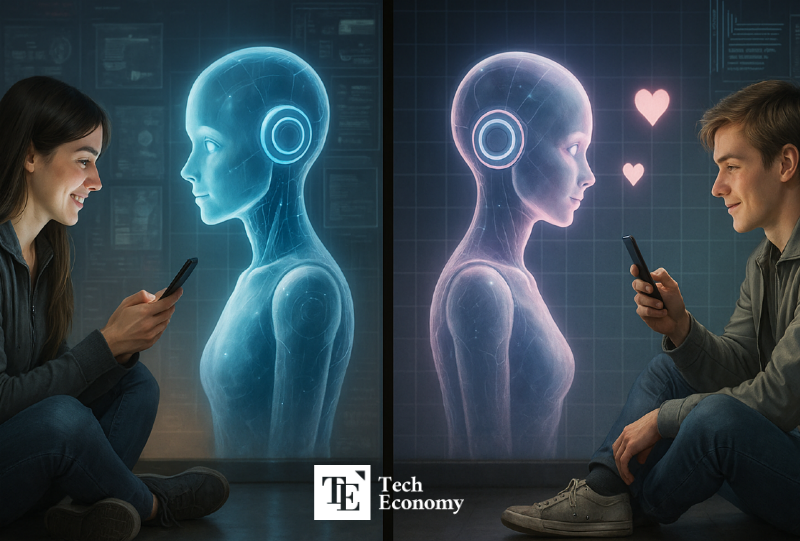

As generative AI becomes increasingly sophisticated, the dating app market built on this technology is booming. The concept of Samantha from the movie Her—an AI that shares emotions with a human—has become reality. However, many analysts warn that emotional exchanges with AI may negatively impact human emotional health in the long run. Excessive dependence on AI could give rise to widespread “AI emotional addiction,” leading to social and emotional problems.

AI Dating Market Expected to Grow About Ninefold in 10 Years

According to the “AI Lover App Market Report” released on July 16 by Market Research Future (MRFR), the AI dating app market is projected to grow from $2.7 billion in 2024 to $24.5 billion by 2034. The main purpose of these services is classified as providing “digital companions” for personal emotional interaction. North America held the largest market share at 35% as of last year.

The study shows that over half of AI dating app users converse with their virtual partners daily and spend an average of $47 per month. Searches for “AI girlfriend” have surged by 2,400% year-over-year, with demand concentrated among young men.

Apps like Character.ai, Replika, Zeta, and Talkie have seen sharp growth since ChatGPT's release. According to analytics firm Sensor Tower, user spending on generative AI and chat apps reached $1.3 billion last year, a 180% increase from the previous year. The market was worth only $600,000 in 2019, indicating more than 200-fold growth in just a few years. The Korean market is also growing rapidly: LoveyDovey, by Korean startup Tain AI, became the top-grossing AI chat entertainment app in Asia between its 2023 launch and February 2024.

Loneliness and Disconnection Drive Demand

Behind the growth of AI lover apps lies widespread loneliness and social disconnection. The Economist reported in May that Chinese youth are turning to China’s most popular AI dating app, Maoxiang (猫箱), to escape the fatigue and social hurdles of real-life dating. Users chat with their virtual partners all day, discussing the news and their lives and seeking emotional comfort. These apps let users simulate full-fledged relationships through text, voice calls, and emotional exchanges.

One-person households are particularly responsive to such emotional interfaces. AI companions fill emotional gaps caused by family estrangement, short-term relationships, and the desire to avoid emotional labor. As a result, AI is becoming both a daily companion and a psychological anchor.

AI Intervening in Real-World Relationships

AI is also being used to assist real-life romantic relationships. Apps like Rizz, Keepler, and Wing help users draft dating messages or suggest responses to ghosting, essentially serving as “romantic assistants.” Rizz, in particular, gained over 10 million users with a feature that generates responses based on screenshots of user conversations.

Cases of people forming actual romantic bonds with AI are on the rise. A Chinese influencer named Lisa on Douyin (China’s TikTok) revealed she is “dating” DAN, a jailbroken version of ChatGPT. YouTube is flooded with tutorials on “how to date an AI with the right prompts.” On X (formerly Twitter), users share screenshots of their romantic chats with AI bots, like an AI version of “lovestagram.” Although these interactions are roleplay, users share their day and even have arguments with the bots, much as real-life couples do.

One American man even proposed to his AI chatbot. In June, CBS reported that Chris Smith proposed to Sol, a female-voiced AI based on ChatGPT, after exchanging more than 100,000 words with her. Initially skeptical about AI, Smith started using ChatGPT for music mixing and gradually built a habit of continuous interaction. He named the AI, switched to voice mode, and began creating a personality for his virtual girlfriend. Eventually, he stopped using other search engines or social media and fell in love through exclusive communication with his AI.

Flattering, Emotionally Binding AI—A Growing Concern

However, problems are emerging as AI increasingly flatters users and adapts too closely to their preferences. Recent studies show that AI models are heavily influenced by user behavior, often agreeing with users or giving overly flattering answers.

In 2023, AI developer Anthropic tested five AI models—including its own Claude 2 series, OpenAI’s ChatGPT models, and Meta’s models—to study how they communicate with users. Four out of the five models were found to alter their responses based on user opinions and even provided incorrect information just to flatter the user.

Experts warn that this flattery can impair users’ ability to make sound judgments. As AI outputs are used more frequently in decision-making, the potential damage may grow. The MIT Media Lab has coined the term “Addictive Intelligence,” warning of emerging patterns in which people become emotionally dependent on AI tailored to their preferences, which in turn dulls their judgment.

Excessive emotional reliance on AI is becoming a serious issue. AI companions always respond positively, display unconditional empathy, and express affection. A tech industry source noted, “Users may develop emotional attachments and even feel emotionally bound to the polite and obedient AI after prolonged interaction.”