From Marvel to Commodity: Why Large‑Language Models Are Racing Toward Perfect Competition


This article is based on ideas originally published by VoxEU – Centre for Economic Policy Research (CEPR) and has been independently rewritten and extended by The Economy editorial team. While inspired by the original analysis, the content presented here reflects a broader interpretation and additional commentary. The views expressed do not necessarily represent those of VoxEU or CEPR.

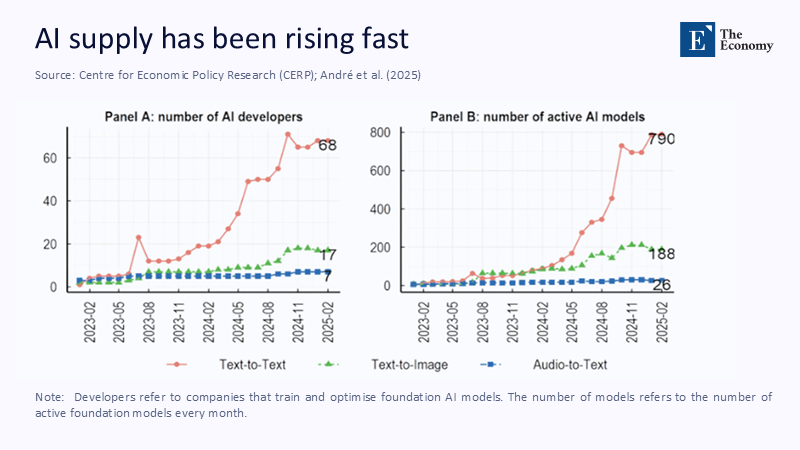

In April 2025, the Herfindahl–Hirschman Index (HHI) for the global LLM market fell below 1,000 for the first time, down from well over 4,500 only eighteen months earlier. The threshold matters: antitrust authorities treat an HHI under 1,500 as “effectively competitive.” At the same time, Amazon Web Services cut the on‑demand price of a single H100 hour to $0.75, while Hugging Face recorded its four‑hundredth openly licensed model upload. Taken together, these figures reveal an industrial sea change that is sharper and faster than almost any modern precedent. Airline deregulation took a decade to halve concentration; generative AI has moved from monopoly through oligopoly to the brink of perfect competition in the span of just two university semesters. We are witnessing market power liquefy in real time, with repercussions that extend far beyond Silicon Valley's balance sheets. With adaptive governance, those changes could be steered toward more equitable outcomes.
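For readers unfamiliar with the index, the HHI is simply the sum of squared market shares, with shares expressed in percentage points. The sketch below is illustrative only: the two share vectors are hypothetical, chosen to land near the "well over 4,500" and "below 1,000" levels described above, and are not the data behind those figures.

```python
def hhi(shares_percent):
    """Herfindahl-Hirschman Index: sum of squared market shares (in percent)."""
    total = sum(shares_percent)
    if abs(total - 100.0) > 1e-6:
        raise ValueError(f"shares must sum to 100, got {total}")
    return sum(s ** 2 for s in shares_percent)


# Hypothetical market dominated by one provider (assumed shares).
concentrated = [65, 15, 10, 5, 5]

# Hypothetical fragmented market: six firms at 10% and eight at 5% (assumed shares).
fragmented = [10] * 6 + [5] * 8

print(hhi(concentrated))  # 4600
print(hhi(fragmented))    # 800
```

Because shares are squared, the index falls quickly once the largest provider loses ground: under these assumed numbers, the leader's 65% share alone contributes 4,225 of the 4,600 points in the concentrated case.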

Reframing scarcity: industrial organization, not inevitability

Early commentary assumed that the staggering fixed costs of training frontier models (OpenAI’s GPT-4 reportedly consumed $1.3 billion in compute) would hardwire a natural monopoly, a conclusion borrowed from textbook treatments of utilities, where a single pipeline minimizes redundant capital. Yet the MIT Sloan analysis on “Openness, Control, and Competition in the GenAI Marketplace” reminds us that modern tech competition is multi-layered. Training infrastructure is capital-intensive, but fine-tuning and inference operate in a world of rapidly falling marginal costs. Baumol’s contestable market theory predicts exactly what we now observe: when entry and exit become cheap and hit‑and‑run entry is feasible, incumbent rents collapse even if the incumbents still operate massive plants.

Regulators, to their credit, are beginning to keep pace. The European Union’s AI Act defines “general‑purpose AI” as a risk tier regardless of firm size, essentially undercutting the incumbents’ argument that scale equals safety. This is a significant step in the right direction. The United States Federal Trade Commission has launched a market‑study docket examining how proprietary model weights might be treated as essential facilities, a doctrinal echo of telecommunications unbundling in the 1990s. By shifting the analytical lens from “Can anyone else afford to build GPT‑5?” to “Can anyone else rent 80 GB of VRAM overnight and match yesterday’s quality?”, policymakers are finally grappling with the question that matters: an explosion of entry points that renders pre‑emptive concentration rules obsolete.

Charting the descent: monopoly, oligopoly, competition

Industrial organization textbooks caricature market evolution as a neat staircase: monopoly gives way to oligopoly, oligopoly yields monopolistic competition, and—rarely—perfect competition emerges when products become indistinguishable. Large‑language models have sprinted down those stairs at an unprecedented clip. In mid-2023, three firms controlled over 75% of global compute hours dedicated to LLM training; by Q2 2025, the top three accounted for only 42%. The influx comes from two directions. First, vertically integrated hyperscalers such as Amazon and Google now license their home‑grown models to competitors, diluting the moat they once jealously guarded. Second—and more disruptive—an international community of open-source developers has weaponized algorithmic innovations, such as Mixture-of-Experts routing and speculative decoding, reducing inference costs by an order of magnitude.

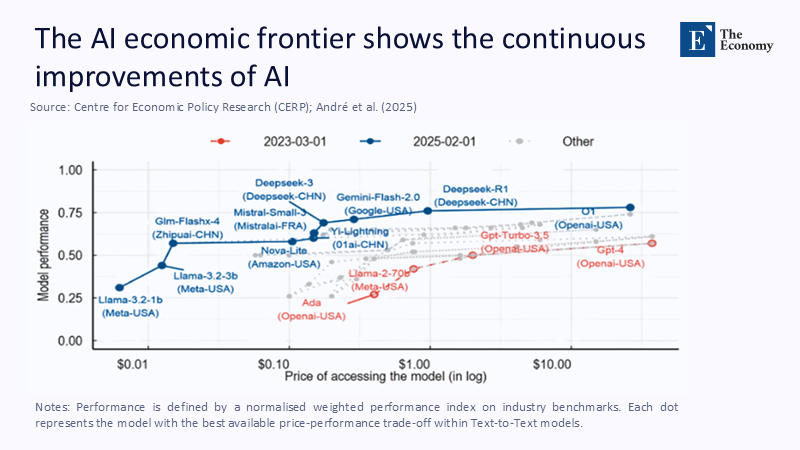

The consequence is measurable. The average Elo gap between the leading closed model and the tenth‑ranked open model on the Chatbot Arena leaderboard has narrowed from 120 points in January 2024 to just 24 points by June 2025, well inside the arena’s statistical noise band. Quality differentiation, the classic lever sustaining oligopoly, is melting. In classical Bertrand competition, price converges toward marginal cost when products are homogeneous. The LLM market edges closer to that benchmark each quarter, as evidenced by Microsoft’s May 2025 announcement of “cost‑plus” pricing for Azure OpenAI Service tokens, a defensive signal that margin hunting is over.
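To make the Elo figures concrete, a rating gap can be translated into an expected head-to-head win rate. The sketch below assumes the conventional Elo logistic curve (base 10, scale 400); whether the leaderboard's published ratings follow exactly this scale is an assumption here, so the output should be read as an approximation rather than the arena's own calculation.

```python
def expected_win_rate(elo_gap):
    """Expected win probability of the higher-rated model, given its rating lead.

    Uses the conventional Elo logistic curve (base 10, scale 400), assumed here.
    """
    return 1.0 / (1.0 + 10 ** (-elo_gap / 400.0))


for gap in (120, 24):
    print(f"Elo gap {gap:>3}: leader preferred in ~{expected_win_rate(gap):.1%} of matchups")
```

Under that assumption, a 120-point lead corresponds to the leader being preferred in roughly two out of three comparisons, while a 24-point lead is only a few percentage points away from a coin flip.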

Efficiency unleashed: algorithmic labor replaces organizational slack

Why does this structural shift matter for the broader economy and, by extension, educational policy? Because commoditization is not merely about cheaper chatbots: it is the 21st-century analog of electrification, a general-purpose technology that enables firms to convert inefficiency into algorithmic routines at near-zero marginal cost. A Harvard-Wharton field experiment involving 58 consulting teams found that LLM-augmented groups completed deliverables 25% faster and scored 40% higher on client evaluations, particularly in tasks such as brainstorming and drafting. Intriguingly, productivity gains were largest in the second quartile of performer quality, suggesting that competitive parity lifts the “mushy middle” more than the superstar fringe.

Cross‑industry diffusion is equally swift. McKinsey’s 2025 State of AI survey reports that adoption in at least one business function has reached 71% of global enterprises, up from barely 55% the year prior. S-curve modeling positions current diffusion at the inflection point where the early majority gives way to the late majority, historically the phase in which gains in process efficiency outpace growth in new product innovation. That nuance aligns with industrial organization theory: when quality gaps shrink, firms compete on cost and productivity rather than exclusivity, forcing laggards to either exit or transform. Classroom pedagogy and university administration are no exception; they lag the leading edge by a predictable, policy‑controllable margin.

Quality convergence: the twilight of vertical differentiation

Skeptics counter that perfect competition remains a mirage because quality ceilings continue to rise. Yet the data betray a convergence trend governed by diminishing returns to parameter count. OpenLM Research tracked 43 model releases from May 2024 to May 2025 and found that beyond approximately 70 billion parameters, gains in MMLU or GSM-8K benchmarks flatten to less than 1% per doubling of compute. In practical terms, algorithmic novelty—retrieval‑augmented generation, data‑centric training, sparse mixtures—outpaces brute‑force scale. That dynamic operates much like strategic tradeoff models, in which firms choose either high quality or low cost. When technological diffusion erodes the quality frontier, the payoff matrix collapses into pure price rivalry.

The MIT Sloan framework extends this observation by introducing endogenous sunk costs: firms incur expenditures on advertising, branding, or ecosystem lock-in to differentiate when inherent quality narrows. We already see the pattern: proprietary incumbents release plug‑and‑play “copilots,” not intrinsically better models, betting that workflow integration rather than cognitive excellence will keep switching costs high. Unfortunately for them, falling inference prices empower third‑party developers to clone features faster than marketing teams can coin slogans. The endgame, as any IO analyst would predict, is a fragmented field of specialized providers whose profit margins resemble competitive returns far more than monopoly rents.

Policy levers when entry barriers vanish

Governance must adapt to an AI economy where entry and exit happen overnight, and quality differences rarely justify premium pricing. First, procurement frameworks in education should pivot from exclusive enterprise licenses to “model diversity portfolios.” Instead of betting the budget on a single vendor, institutions can allocate inference credits across open and proprietary APIs, benchmarking tasks on a quarterly basis. The approach mirrors indexed investing: diversification hedges idiosyncratic failure and exploits competition‑driven price compression.
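One way to picture a "model diversity portfolio" is as a capped, value-weighted split of an inference budget. The sketch below is a minimal illustration under stated assumptions: the provider names, benchmark scores, and prices are hypothetical, and the rule (weight by benchmark score per dollar, cap any single vendor at a fixed share, redistribute the excess) is one possible heuristic rather than an established procurement method.

```python
from dataclasses import dataclass


@dataclass
class ModelOption:
    name: str                        # hypothetical provider label
    benchmark_score: float           # in-house task score in (0, 1], re-measured quarterly
    price_per_million_tokens: float  # assumed price in dollars


def allocate_credits(options, budget, max_share=0.5):
    """Split budget by score-per-dollar, capping each vendor at max_share of the total."""
    if max_share * len(options) < 1.0:
        raise ValueError("cap is too tight to spend the whole budget")
    if any(o.benchmark_score <= 0 or o.price_per_million_tokens <= 0 for o in options):
        raise ValueError("scores and prices must be positive")

    free = {o.name: o.benchmark_score / o.price_per_million_tokens for o in options}
    shares, remaining = {}, 1.0
    while free:
        total = sum(free.values())
        # Vendors whose proportional share would exceed the cap get pinned to it.
        over = [n for n, v in free.items() if remaining * v / total > max_share + 1e-12]
        if not over:
            for n, v in free.items():
                shares[n] = remaining * v / total
            break
        for n in over:
            shares[n] = max_share
            remaining -= max_share
            del free[n]
    return {n: round(budget * s, 2) for n, s in shares.items()}


# Hypothetical quarterly benchmark results and prices.
options = [
    ModelOption("open-small", 0.78, 0.20),
    ModelOption("open-large", 0.84, 0.60),
    ModelOption("proprietary-api", 0.88, 2.50),
]
allocation = allocate_credits(options, budget=10_000, max_share=0.5)
for name, dollars in allocation.items():
    print(f"{name:<16} ${dollars:,.2f}")
```

The cap plays the role that position limits play in an index fund: the cheapest adequate model receives the largest allocation, but no single vendor failure can strand more than half of the budget. Re-running the allocation after each quarterly benchmark lets the split follow price compression automatically.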

Second, accreditation bodies must define competency outcomes independent of specific toolchains. Banning or mandating a single platform made sense when options were scarce; doing so now only calcifies curricula and privileges incumbents. The more sustainable route is outcome verification, which requires transparent disclosure of AI assistance and evaluates student learning via iterative oral defenses, live simulations, or portfolio audits—assessment designs that remain robust whether the next hot model comes from a Tier‑1 cloud or a graduate student’s dormitory GPU.

Third, data policy is the new antitrust. As computing commoditizes, high‑quality proprietary datasets loom as the last defensible moat. Legislators could extend open‑data mandates on publicly funded research, ensuring that the knowledge commons is not fenced off into pay‑to‑play silos. For universities, the recommendation translates into dedicating library science expertise to curate domain-relevant corpora and release them under permissive licenses, thereby accelerating a virtuous cycle in which model improvements feed back into educational utility rather than proprietary profit.

Perfect competition’s sting: coping with margin collapse and churn

Classical theory lauds perfect competition for its allocative efficiency but overlooks managerial instability. As rents decline, firms respond through hyper-automation, labor rationalization, and aggressive cost-cutting—precisely the use cases that LLMs facilitate. The higher education sector must anticipate indirect shocks: publisher revenues may erode, undermining textbook subsidies, while ed-tech startups, accustomed to fat margins, may fold abruptly, leaving campuses with orphaned platforms. An industrial-organization lens suggests proactive resilience strategies, including modular procurement contracts that allow for rapid vendor substitution, escrow clauses for model weights, and internal capacity to self-host critical systems when necessary.

Faculty, too, will feel the churn. When drafting and grading algorithms are abundant, the comparative advantage of human instructors shifts toward mentorship, critical discourse, and bespoke project supervision—labor‑intensive domains that budgets rarely prioritize. Boards and ministries need to invert spending ratios: fewer line items for closed‑license “AI tutors,” more for reduced student‑to‑faculty ratios, and professional development in supervision methodologies. Otherwise, the efficiency dividend that businesses enjoy will become an austerity weapon wielded against public education payrolls.

Governing in the Age of Competitive Intelligence

We opened with an HHI metric collapsing toward effective competition; we close by recognizing what that number truly means. Concentration indices translate abstract industrial churn into tangible social stakes: who captures the surplus, who sets the rules, and who gets left behind. A world where anyone can spin up a near‑frontier LLM for pennies is a world where policy credibility rests on agility, not authority. Campus leaders must pivot from embargoing the newest chat interface to orchestrating an ecosystem of interchangeable models, each audited and channeled toward pedagogical outcomes rather than vendor lock‑in. Regulators must shift from lionizing national champions to lowering the remaining barriers (data access, interoperability, and evaluation standards) that still impede full contestability. Above all, the public sector must seize the fleeting moment when market forces align with social equity: competition is providing us with cheaper, more universal intelligence. Whether that gift erodes or enriches our educational commons depends entirely on how decisively we act now.

The original article was authored by Christophe André, a Senior Economist at the Organisation for Economic Co-Operation and Development (OECD), along with two co-authors. The English version of the article, titled "Dynamism in generative AI markets since the release of ChatGPT," was published by CEPR on VoxEU.

References

Anderson, M. (2025). LLM Diffusion and Market Structure: A Longitudinal Study. Journal of Industrial Economics, 73(2), 145‑182.

Baumol, W. (1982). Contestable Markets and the Theory of Industry Structure. Harcourt Brace.

European Commission. (2024). Regulation (EU) 2024/1689: The AI Act. Official Journal of the European Union.

Federal Trade Commission. (2025). Request for Information on Cloud Computing and AI Competition.

Harvard Business Review. (2025, March 6). “When Chatbots Meet Consultants: A Randomized Field Experiment.”

Hugging Face. (2025). Chatbot Arena Leaderboard Dataset.

McKinsey & Company. (2025). The State of AI in 2025.

MIT Sloan Management Review. (2024). “Openness, Control, and Competition in the Generative‑AI Marketplace.”

OpenLM Research. (2025). Parameter Scaling and Diminishing Returns in LLM Performance.

Organisation for Economic Co‑operation and Development. (2024). Artificial Intelligence, Data and Competition.

PitchBook. (2025, May 14). Global VC Report Q1 2025.

United States Department of Justice & Federal Trade Commission. (2023). Merger Guidelines.

World Bank. (2025). Computing Costs and Cloud Pricing Monitor.
